Building the MCP Server Capability: What It Entails

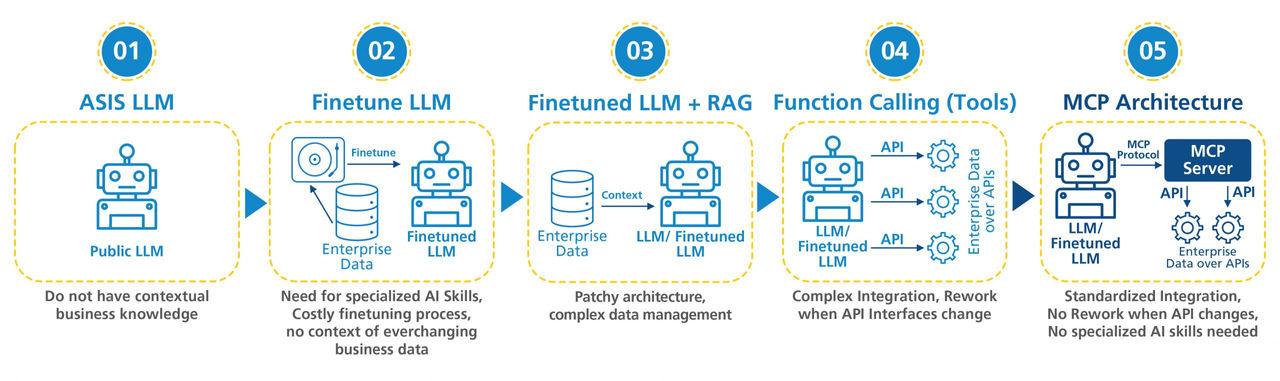

Organizations are shifting toward a broader architectural blueprint for enterprise-grade, context-aware AI solutions. Implementing MCP in these solutions addresses several significant challenges in the agentic AI domain:

MCP establishes a vendor-neutral standard, ensuring seamless connectivity between models and tools, regardless of the platform or provider.

MCP facilitates scalable integration through modular, clean protocols, eliminating the need for fragile, one-off function calls.

MCP enables models to dynamically discover and utilize tools, underlying data, and capabilities, enhancing the adaptability and responsiveness of AI workflows.

MCP’s standardized interfaces and shared context layer streamline integration while preserving contextual continuity, enabling AI systems to deliver more accurate, relevant insights with reduced technical complexity.

MCP enables secure, policy-driven access to enterprise data, supporting adherence to regulatory requirements and internal governance, which is crucial for enterprise-grade compliance and trust.

With off-the-shelf modular capabilities, MCP supports rapid adaptation to diverse enterprise use cases, accelerating AI adoption across internal teams, customers, and partners.
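To make the discovery and policy points above more concrete, here is a minimal sketch in plain Python of how a server might answer the protocol's `tools/list` and `tools/call` requests. It is a simplified stand-in and not the official MCP SDK: the `ToolRegistry` class, the `allowed_roles` policy field, the role names, and the `check_eligibility` tool are all hypothetical, and the message shapes are reduced to the essentials.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, Set


@dataclass
class Tool:
    name: str
    description: str
    input_schema: Dict[str, Any]
    handler: Callable[..., str]
    # Hypothetical policy field: roles permitted to see and call this tool.
    allowed_roles: Set[str] = field(default_factory=set)


class ToolRegistry:
    """Illustrative stand-in for the tool-handling part of an MCP server."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def handle(self, request: Dict[str, Any], role: str) -> Dict[str, Any]:
        req_id = request.get("id")
        method = request.get("method")

        if method == "tools/list":
            # Discovery: return only the tools this role may use.
            visible = [
                {
                    "name": t.name,
                    "description": t.description,
                    "inputSchema": t.input_schema,
                }
                for t in self._tools.values()
                if role in t.allowed_roles
            ]
            return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": visible}}

        if method == "tools/call":
            params = request.get("params", {})
            tool = self._tools.get(params.get("name"))
            if tool is None or role not in tool.allowed_roles:
                return {
                    "jsonrpc": "2.0",
                    "id": req_id,
                    "error": {"code": -32602, "message": "Unknown or unauthorized tool"},
                }
            text = tool.handler(**params.get("arguments", {}))
            return {
                "jsonrpc": "2.0",
                "id": req_id,
                "result": {"content": [{"type": "text", "text": text}], "isError": False},
            }

        return {
            "jsonrpc": "2.0",
            "id": req_id,
            "error": {"code": -32601, "message": "Method not found"},
        }


def check_eligibility(member_id: str) -> str:
    # Hypothetical tool body; a real server would query a payer system here.
    return f"Eligibility lookup requested for member {member_id}"


registry = ToolRegistry()
registry.register(
    Tool(
        name="check_eligibility",
        description="Look up a member's coverage eligibility (illustrative only).",
        input_schema={
            "type": "object",
            "properties": {"member_id": {"type": "string"}},
            "required": ["member_id"],
        },
        handler=check_eligibility,
        allowed_roles={"care_manager"},
    )
)

# A client first discovers the available tools, then calls one by name.
listing = registry.handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}, role="care_manager")
print(json.dumps(listing, indent=2))
```

Because the client learns tool names and input schemas at runtime, new tools can be registered on the server without changing client code, and filtering by role at both the listing and the call step keeps restricted tools out of a model's reach.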

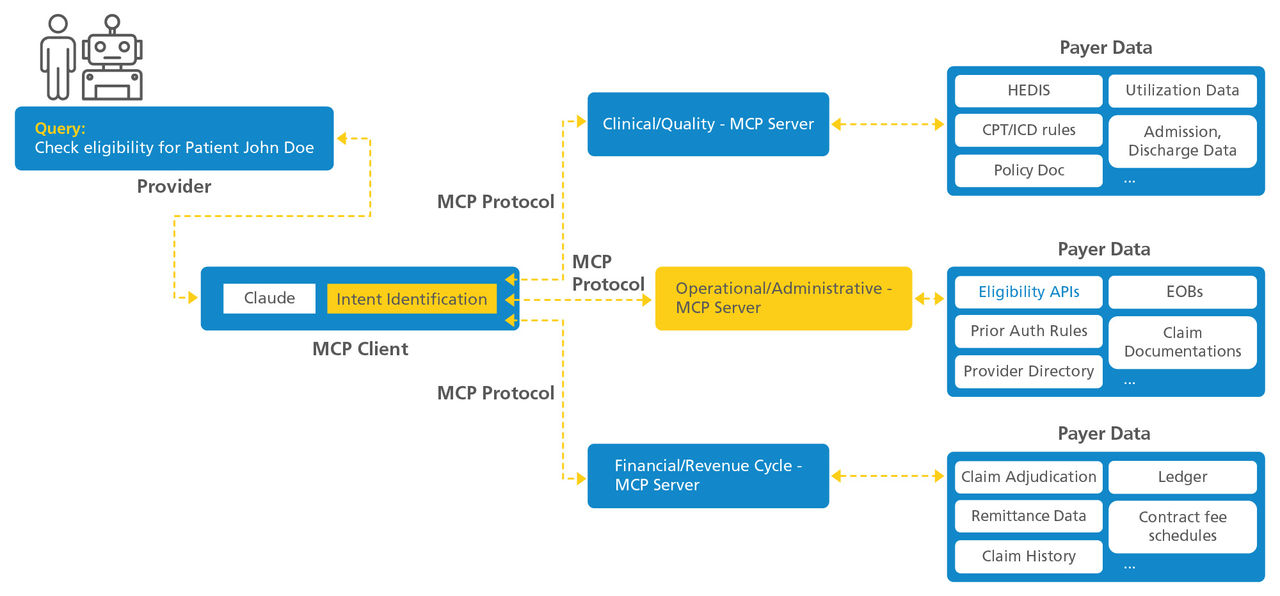

Establishing key capabilities within the MCP server is essential in the payer-provider ecosystem. It allows organizations to build robust, off-the-shelf capabilities that support a variety of use cases relevant to both payers and providers, and to achieve greater efficiency, flexibility, and scalability in AI-based interactions and workflows.

To this end, we have grouped the MCP server capabilities for a payer organization into three categories, with suggested tools for a variety of use cases.